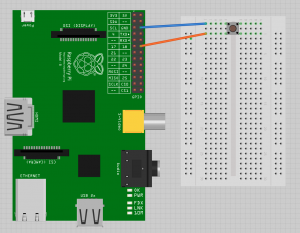

I ordered a pack of buttons, 3 breadboards, F-M wires, 2 protractors, and the Waveshare fisheye camera from Amazon. I already have a Raspberry Pi B (2011).

I installed the camera and a button, then used two scripts to take a photo whenever the button is pressed.

As per the instructions on raspberrypi.org, I started with a simple Bash file to take pictures and save them with the date/time as the filename.

camera.sh

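    #!/bin/bash

    # Build a timestamp (YYYY-MM-DD_HHMMSS) to use as the filename.
    DATE=$(date +"%Y-%m-%d_%H%M%S")

    # Take a photo and save it under the timestamped name.
    raspistill -o "/home/pi/camera/$DATE.jpg"

The raspistill command triggers the camera, opens a preview of the camera’s view, and takes a photo.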
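The same timestamped capture could also be driven from Python rather than Bash. What follows is only a minimal sketch, not something I ran on the Pi: `build_capture_command` and `capture` are hypothetical helper names, and it assumes raspistill is on the PATH and that /home/pi/camera already exists.

```python
import subprocess
from datetime import datetime


def build_capture_command(now, out_dir="/home/pi/camera"):
    """Return the raspistill argument list for a photo named after `now`."""
    stamp = now.strftime("%Y-%m-%d_%H%M%S")  # same format as camera.sh
    return ["raspistill", "-o", "{}/{}.jpg".format(out_dir, stamp)]


def capture():
    """Take one photo (hypothetical helper; needs raspistill installed)."""
    return subprocess.call(build_capture_command(datetime.now()))
```

Calling `capture()` on the Pi would then play the role of camera.sh. Keeping the command construction in its own function means the filename logic can be checked on any machine, without a camera attached.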

I then created a Python script that acts on a press of the button, which is connected to GPIO 18 (BCM numbering).

switch.py

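    import RPi.GPIO as GPIO
    import time
    import os

    # Use Broadcom (BCM) pin numbering; the button is on GPIO 18.
    GPIO.setmode(GPIO.BCM)
    # Enable the internal pull-up, so the pin reads False while the button is pressed.
    GPIO.setup(18, GPIO.IN, pull_up_down=GPIO.PUD_UP)

    while True:
        input_state = GPIO.input(18)
        if input_state == False:
            print('Button Pressed')
            os.system("/home/pi/camera.sh")
            time.sleep(0.2)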

When run (sudo python switch.py), this script prints “Button Pressed” and executes camera.sh whenever the button is pressed.
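Because the loop in switch.py checks the level of the pin, holding the button down would run camera.sh again on each pass. One possible refinement is to react only to the moment the pin changes from high to low. The sketch below illustrates that idea on simulated readings rather than the real GPIO; `falling_edges` is a hypothetical helper name, and the sample values are made up for illustration.

```python
def falling_edges(readings):
    """Yield the index of each sample where the input goes from high to low.

    With the pull-up enabled, the pin reads True while the button is up and
    False while it is held down, so a press is a True -> False transition.
    """
    previous = True  # assume the button starts released
    for index, state in enumerate(readings):
        if previous and not state:
            yield index
        previous = state


# Simulated samples: one long press, then one short press.
samples = [True, False, False, False, True, True, False, True]
print(list(falling_edges(samples)))  # [1, 6]
```

On the Pi, the readings would come from repeated calls to GPIO.input(18), and each yielded index would correspond to one photo.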

From this I learned that the camera takes approximately 3 seconds to take a photo, and it displays a preview of the image during this time. This delay will be an important consideration if I decide to build a rotating platform.

It was very easy to connect the camera and take a picture with this setup, but the camera also comes loose easily, so I’ll have to be careful moving forward to ensure it always remains properly connected.

To get the photos off the Pi, I need to power it down, remove its SD card, and plug the card into my Mac. OS X doesn’t natively support the ext filesystem used by the Pi, so the partition that houses everything I care about can’t be seen. As a workaround, I can open the SD card in my Ubuntu virtual machine, which allows me to read and write to the card. I’m looking into plugins and applications such as OSXFuse to provide this functionality within OS X.
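Coming back to the capture delay of roughly 3 seconds: it could be measured rather than estimated by timing the script from Python. This is a minimal sketch assuming Python 3 (for `time.monotonic`); `time_command` is a hypothetical helper name, and a harmless stand-in command is used so the sketch runs on any machine.

```python
import subprocess
import sys
import time


def time_command(args):
    """Run a command to completion and return the elapsed wall-clock seconds."""
    start = time.monotonic()
    subprocess.call(args)
    return time.monotonic() - start


# Stand-in command so the sketch runs anywhere; on the Pi this would be
# something like ["/home/pi/camera.sh"].
elapsed = time_command([sys.executable, "-c", "pass"])
print("took {:.2f} s".format(elapsed))
```

Averaging a handful of runs would give a steadier figure to plan a rotating platform around.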